aesthetic perception

combining analog and generative

Our aesthetic perception is shaped by the forms of nature. Today, we can synthesize these forms and textures algorithmically, at least to a certain extent. But we can also think about how natural and synthesized forms can work together.

aNa is an approach for inserting textures and shapes from the natural environment into computer-generated graphics. aNa tries to narrow the gap between analog video technology and digital animation.

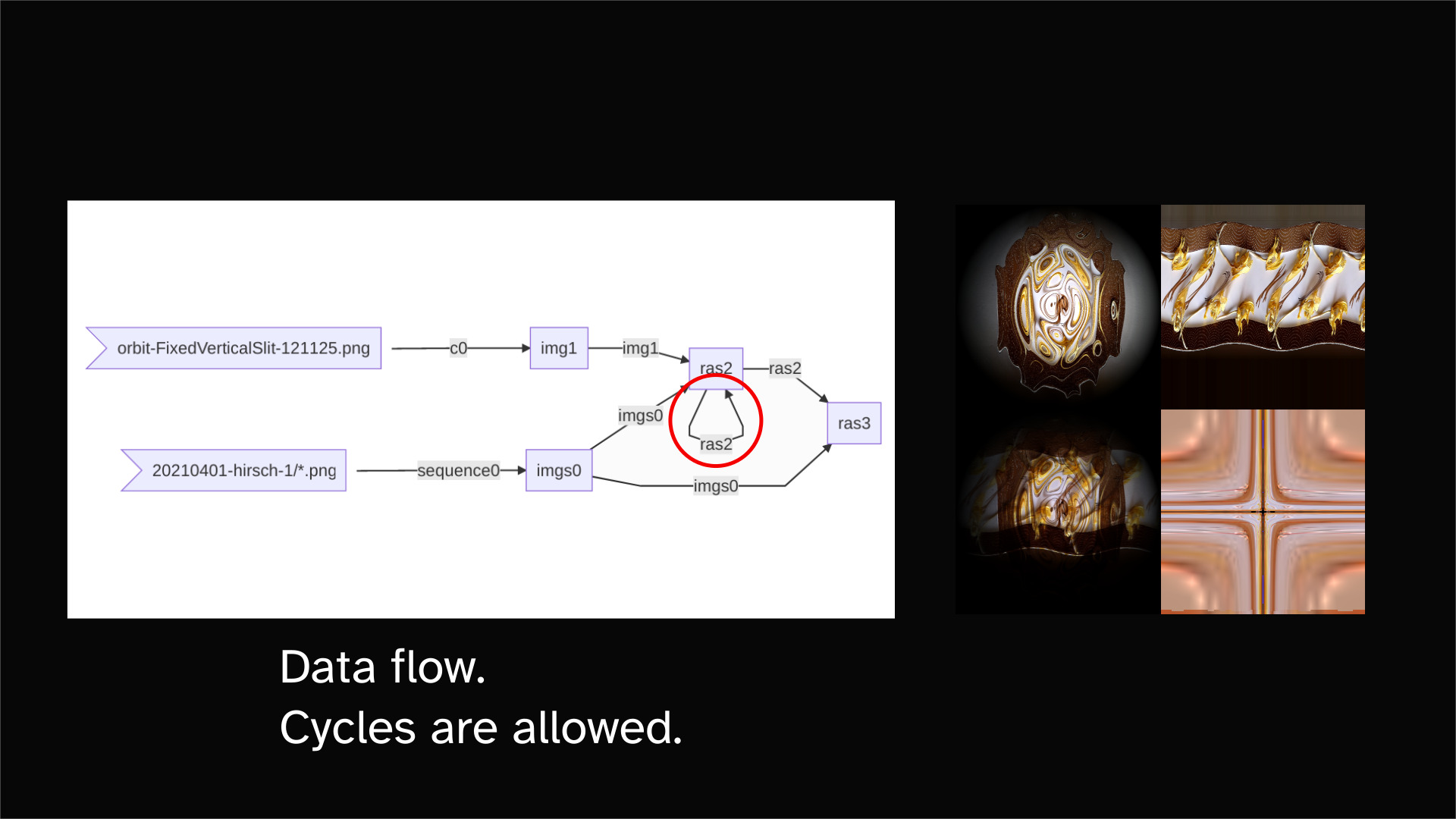

The second principle comes from analog video post-processing technology. The image data flows through several filters and is modified along the way. Here, however, the modification is not done by analog electronics but by image manipulations computed algorithmically, directly on the graphics card. The filter pipeline may contain feedback loops. These lead to effects that are difficult to control but produce surprising visuals.
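
A feedback loop of this kind can be sketched in a few lines. The following is a minimal CPU-side illustration, not aNa's GPU code: frames are assumed to be plain lists of brightness values, and the filters `shift` and `decay` as well as the class `FeedbackPipeline` are hypothetical stand-ins for filters running on the graphics card. Each new frame is mixed with the previous output before it passes through the filter chain, so a single flash of light leaves a drifting, fading trail.

```python
from typing import Callable, List

Frame = List[float]           # one row of brightness values in [0, 1]
Filter = Callable[[Frame], Frame]


def shift(frame: Frame) -> Frame:
    """Move every value one position to the right, wrapping around."""
    return frame[-1:] + frame[:-1]


def decay(frame: Frame, factor: float = 0.8) -> Frame:
    """Attenuate the signal so the feedback loop does not saturate."""
    return [factor * v for v in frame]


def mix(a: Frame, b: Frame, amount: float) -> Frame:
    """Linear cross-fade: amount=0 returns a, amount=1 returns b."""
    return [(1.0 - amount) * x + amount * y for x, y in zip(a, b)]


class FeedbackPipeline:
    """Filter chain whose previous output is fed back into its input."""

    def __init__(self, filters: List[Filter], width: int, feedback: float = 0.7):
        self.filters = filters
        self.feedback = feedback
        self.previous: Frame = [0.0] * width  # output of the last step

    def step(self, source: Frame) -> Frame:
        # Mix the new input with the last output, then run the filter chain.
        frame = mix(source, self.previous, self.feedback)
        for f in self.filters:
            frame = f(frame)
        self.previous = frame
        return frame


if __name__ == "__main__":
    pipeline = FeedbackPipeline([shift, decay], width=5)
    pipeline.step([1.0, 0.0, 0.0, 0.0, 0.0])  # a single flash of light
    for _ in range(3):
        print(pipeline.step([0.0] * 5))       # the flash drifts right and fades
```

The `decay` factor keeps the loop stable; removing it or raising `feedback` close to 1 is exactly where such loops start to become hard to control.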

The basic principles

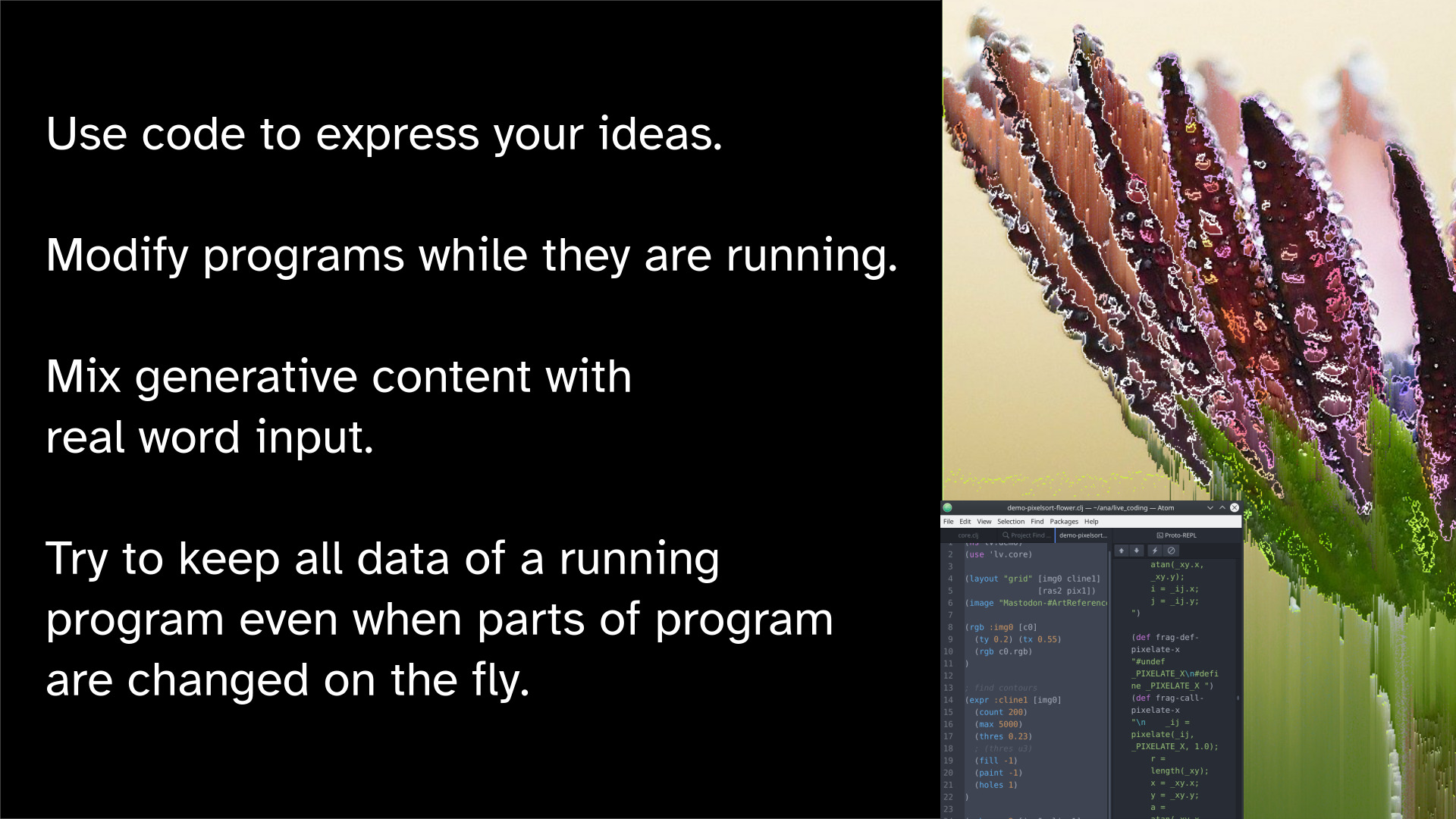

The basic principles are:

- Use code to express your ideas.

- Modify programs while they are running.

- Mix generative content with real-world input.

- Try to keep all data of a running program, even when parts of that program are changed on the fly.
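
The last two principles can be illustrated by keeping code and data in separate places. The sketch below is an assumption about how such a system could be organized, not aNa's actual Lisp implementation: filters live in a registry that can be redefined at any time, while the buffers they write to survive every redefinition. The class `LiveSystem` and its methods are hypothetical.

```python
from typing import Callable, Dict, List

Transform = Callable[[float], float]


class LiveSystem:
    """Hypothetical live-coding core: code and data are stored separately."""

    def __init__(self) -> None:
        self.filters: Dict[str, Transform] = {}    # code: may be replaced any time
        self.buffers: Dict[str, List[float]] = {}  # data: survives redefinitions

    def define(self, name: str, fn: Transform) -> None:
        """(Re)define a filter while the system runs; buffers are untouched."""
        self.filters[name] = fn

    def run(self, name: str, buffer: str, value: float) -> float:
        """Apply the current version of a filter and append the result."""
        result = self.filters[name](value)
        self.buffers.setdefault(buffer, []).append(result)
        return result


if __name__ == "__main__":
    live = LiveSystem()
    live.define("gain", lambda v: v * 2.0)
    live.run("gain", "out", 1.0)
    live.define("gain", lambda v: v * 10.0)  # redefined on the fly
    live.run("gain", "out", 1.0)
    print(live.buffers["out"])  # [2.0, 10.0]: the earlier result is still there
```

Rebinding a name in a dictionary is only a rough analogue of what a Lisp runtime offers natively, but it shows the design choice: replacing code never touches the data.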

Feedback loops / analog & digital

Analog feedback loops are an artistic technique that emerged in the late 1960s. What happens when I point an analog VHS camera at a monitor that displays the camera’s own image? What happens when a person views their image on a monitor, but with a time delay between the camera and the monitor?

Technically, these ideas cannot be carried over one-to-one from the analog world into the discrete digital world. But they have influenced my approach, hence the name “analog Not analog”. I want to achieve visual impressions like the ones familiar from the analog world, but the glitches are made digitally.
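
The time-delayed monitor from the questions above can be approximated digitally with a delay line: a FIFO buffer that returns each frame a fixed number of frames after it arrived. The class `DelayLine` below is a hypothetical sketch; any frame representation can be stored in it.

```python
from collections import deque
from typing import Deque, Generic, Optional, TypeVar

T = TypeVar("T")  # any frame representation


class DelayLine(Generic[T]):
    """Emit every frame exactly `delay` frames after it was pushed."""

    def __init__(self, delay: int) -> None:
        if delay < 1:
            raise ValueError("delay must be at least one frame")
        self.delay = delay
        self.buffer: Deque[T] = deque()

    def push(self, frame: T) -> Optional[T]:
        """Store the new camera frame and return the one to show now."""
        self.buffer.append(frame)
        if len(self.buffer) > self.delay:
            return self.buffer.popleft()
        return None  # still filling up: the monitor stays dark


if __name__ == "__main__":
    line: DelayLine[str] = DelayLine(2)
    for name in ["f0", "f1", "f2", "f3"]:
        print(name, "->", line.push(name))
```

At 30 frames per second, `DelayLine(60)` would delay the image by two seconds.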

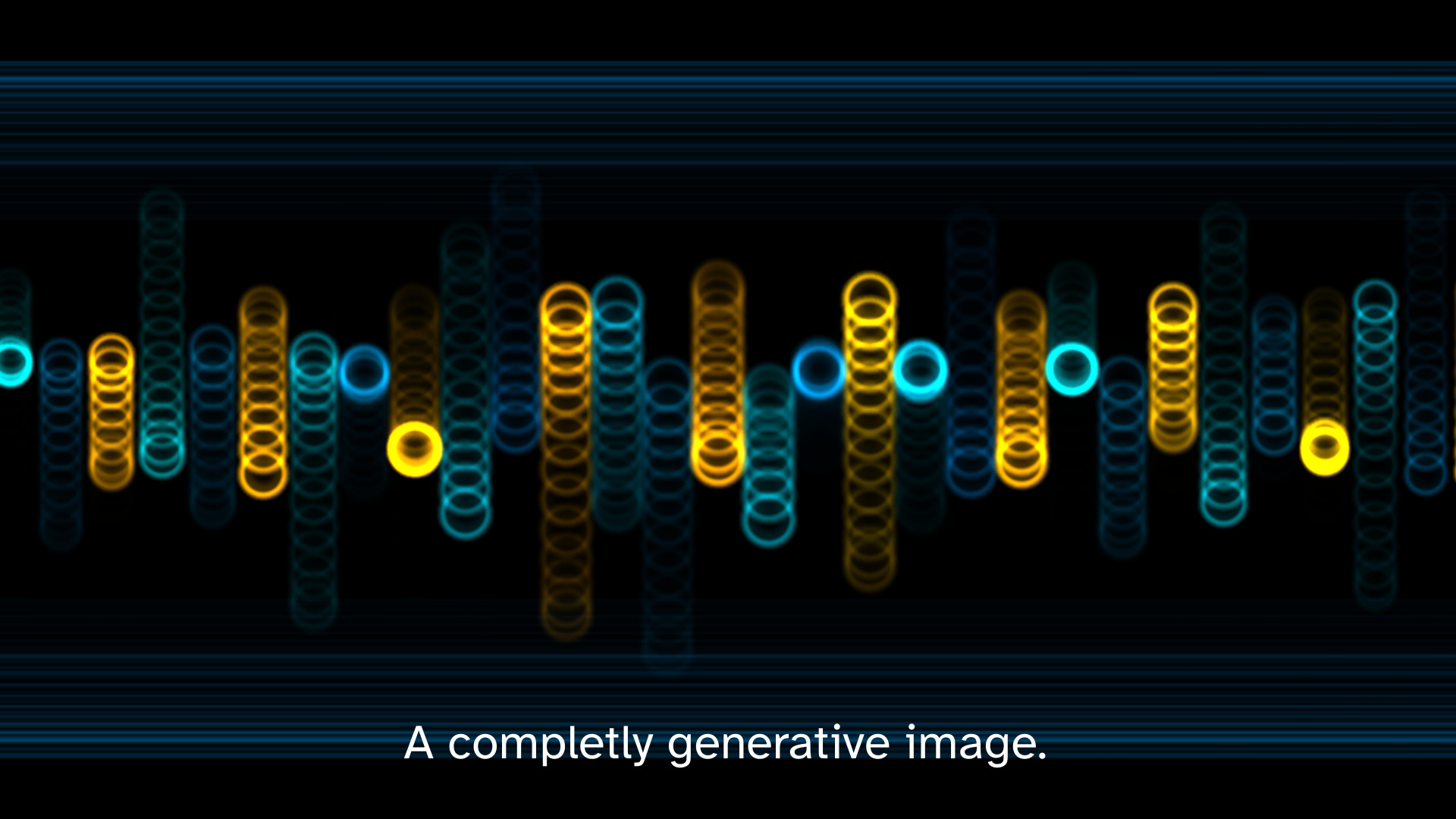

Algorithmic art

Algorithmic art often leads to an overall appearance that is too sterile. I am looking for something that breaks up these mathematically clean images. I think the image below looks cool with this color contrast. But something is missing: there is no dirt in the picture.

There are different approaches to avoiding images that are too clean:

- A) Bring images and sound material from real life into the system and mix them with the generative material.

- B) Use an unreliable medium for the output. Think of a cheap pen plotter dragging a fluttering felt-tip pen across the paper.

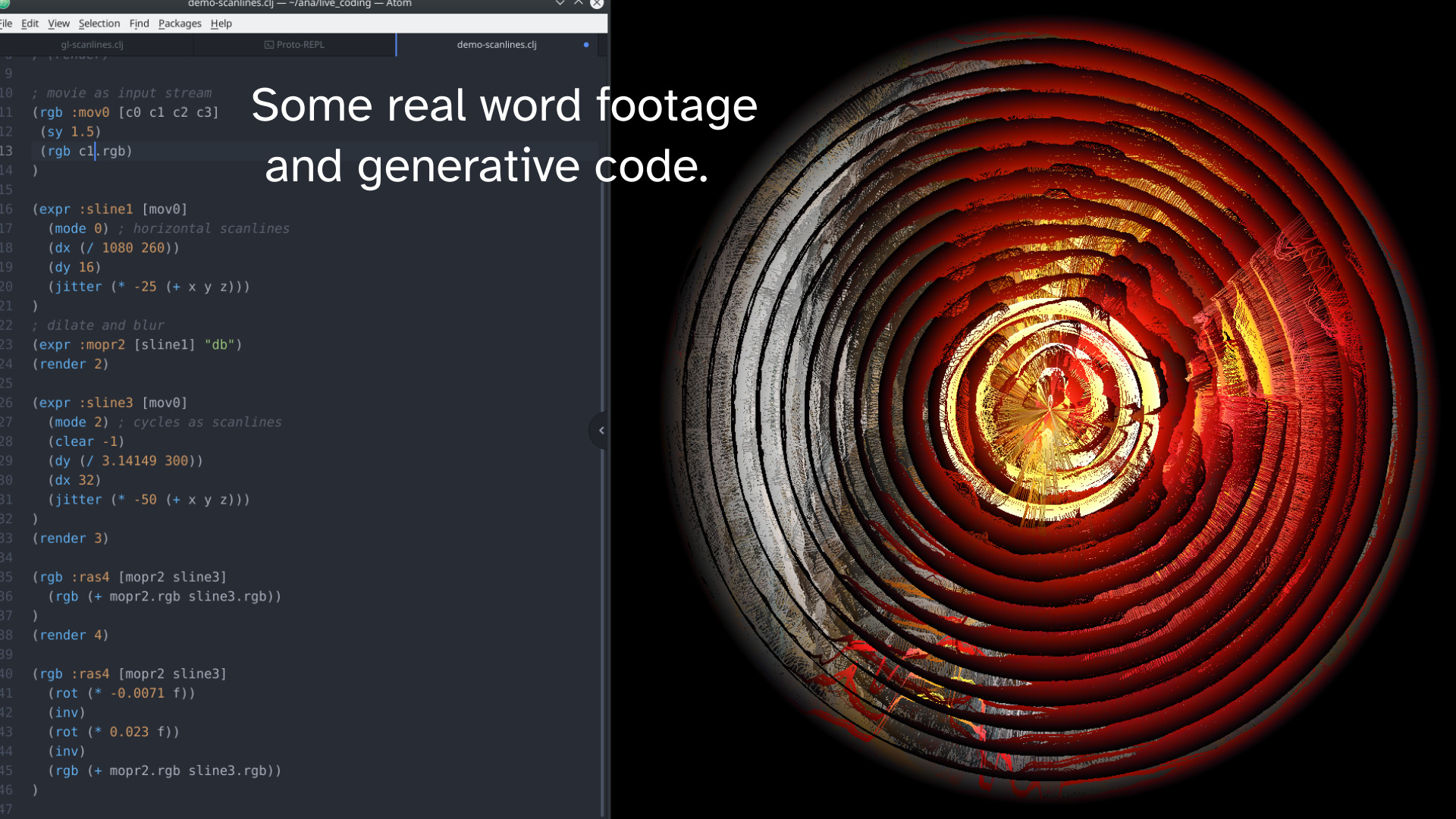

Footage from the real world

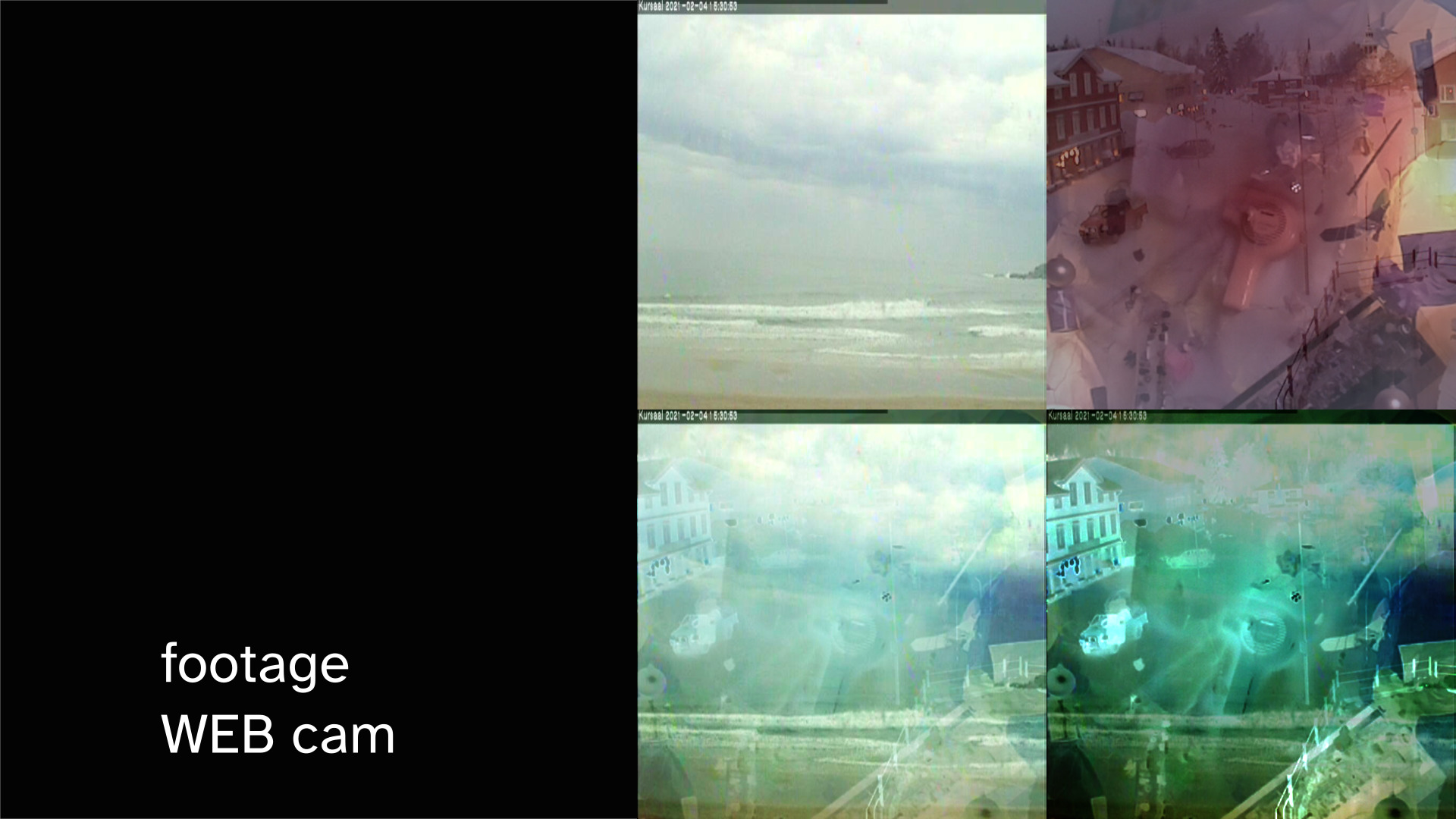

aNa follows approach A. It reads from webcams, movie files and sensors to break the clean look of mathematically produced graphics.

This picture series was taken from a film showing cars driving at night. Here you can see all of the frame buffers involved. The original video has been massively post-processed by an algorithm. But because the initial material comes from the real world, it doesn’t look so smooth. That is what makes it interesting.

Here, three webcams are superimposed. The images and lighting conditions the cameras deliver cannot be controlled. The result feels like serendipity.
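
How the three camera images are combined is not specified here. One common choice for superimposing light images is a "screen" blend, sketched below under the assumption that frames are equally sized lists of brightness values in [0, 1]. The function name `screen` is illustrative, and aNa may well use a different blend mode.

```python
from math import prod
from typing import List, Sequence

Frame = List[float]  # brightness values in [0, 1], all frames the same size


def screen(frames: Sequence[Frame]) -> Frame:
    """Superimpose frames like overlapping projections of light.

    Per pixel: 1 - (1 - a) * (1 - b) * (1 - c) * ...
    The result is never darker than the brightest input at that pixel.
    """
    if not frames:
        raise ValueError("need at least one frame")
    width = len(frames[0])
    if any(len(f) != width for f in frames):
        raise ValueError("all frames must have the same size")
    return [1.0 - prod(1.0 - f[i] for f in frames) for i in range(width)]


if __name__ == "__main__":
    cam_a = [0.5, 0.0, 0.9]
    cam_b = [0.5, 0.0, 0.1]
    cam_c = [0.0, 0.2, 0.0]
    print(screen([cam_a, cam_b, cam_c]))
```

Unlike a plain average, this blend never dims a bright spot delivered by a single camera, which keeps the unpredictable light situations visible.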

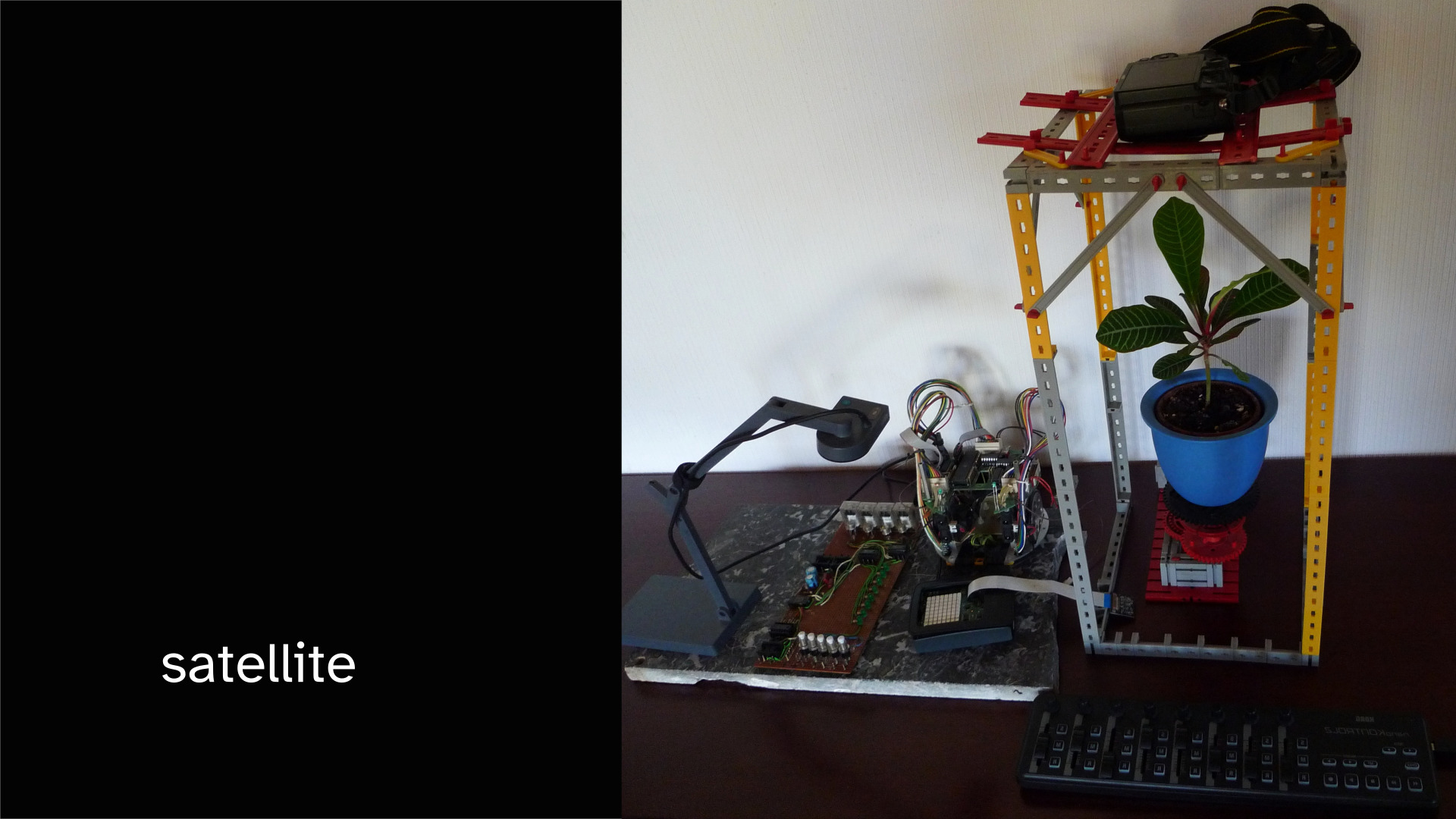

Studio

I also have a small studio. It consists of lamps, controllable LEDs, the sensors of a toy robot, turntables and a camera.

For example, I use an electric motor to make this plate and the decoration rotate slowly. A webcam films from above. aNa then distorts the movement into slit-scan effects.
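
A slit-scan effect can be produced by taking each output column from a different moment in time. The sketch below assumes frames stored as `frame[row][column]` and uses a hypothetical `SlitScan` class that keeps a short history of frames: column `x` of the output comes from the frame captured `x` frames ago. It illustrates the general technique, not aNa's actual filter.

```python
from collections import deque
from typing import Deque, List

Frame = List[List[float]]  # frame[row][column]


class SlitScan:
    """Column x of the output is taken from the frame captured x frames ago."""

    def __init__(self, width: int) -> None:
        self.width = width
        # Newest frame at index 0; the oldest is dropped once `width` are stored.
        self.history: Deque[Frame] = deque(maxlen=width)

    def step(self, frame: Frame) -> Frame:
        self.history.appendleft(frame)
        out: Frame = []
        for row in range(len(frame)):
            out_row = []
            for x in range(self.width):
                # While the history is still filling up, use the oldest frame.
                age = min(x, len(self.history) - 1)
                out_row.append(self.history[age][row][x])
            out.append(out_row)
        return out


if __name__ == "__main__":
    scan = SlitScan(width=3)
    for t in range(4):
        print(scan.step([[t, t, t]]))  # each column lags one frame more
```

With a slowly rotating object, the left edge of the output shows the present while the right edge shows the past, which bends the rotation into curved shapes.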

Sensors and Input Channels

Over time, I have collected quite a few sensors, but putting them to use is still a work in progress. They come from different eras. Some are purely analog; others are already integrated into a digital embedded system.

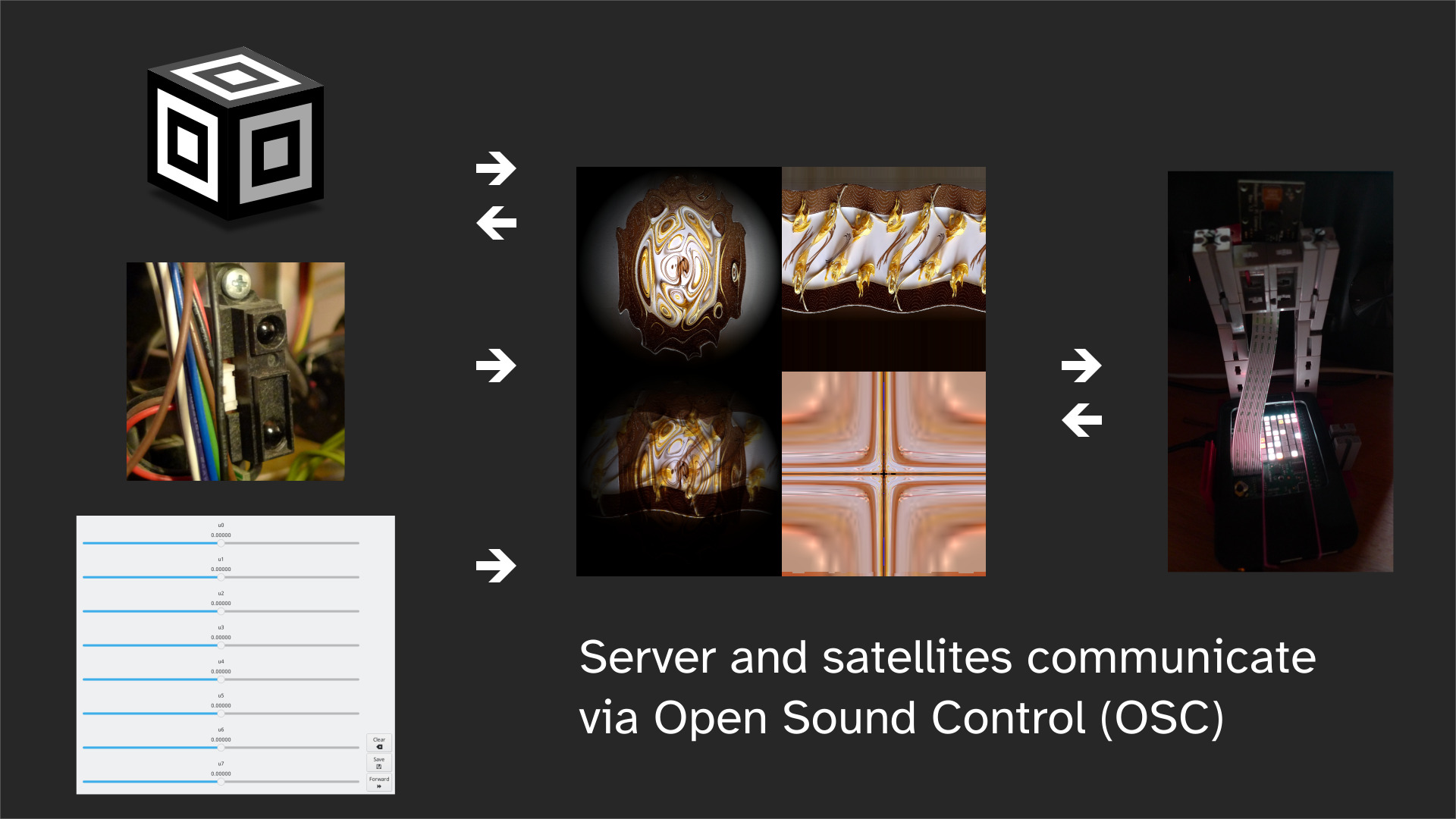

Satellite

Various small gadgets can orbit the visualization server. I call them satellites. For example, a Raspberry Pi can be used as an input or output device.

A Sense HAT has an 8x8 LED matrix that can be controlled by OSC messages. In this case, these messages are sent every frame from the visualization server to the Raspberry Pi.
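
The message format is not described here. As a sketch, assuming a hypothetical address `/sensehat/pixels` and a single OSC blob carrying 64 RGB triples (192 bytes), an OSC 1.0 message can be encoded with the standard library alone: strings are NUL-terminated and padded to a multiple of four bytes, and a blob is prefixed with its length as a big-endian int32.

```python
import struct
from typing import Sequence, Tuple

RGB = Tuple[int, int, int]


def osc_string(text: str) -> bytes:
    """OSC string: ASCII, NUL-terminated, padded with NULs to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_blob(payload: bytes) -> bytes:
    """OSC blob: big-endian int32 length, the bytes, padding to a multiple of 4."""
    return struct.pack(">i", len(payload)) + payload + b"\x00" * (-len(payload) % 4)


def led_matrix_message(pixels: Sequence[RGB], address: str = "/sensehat/pixels") -> bytes:
    """Encode an 8x8 frame (64 RGB triples, each channel 0..255) as one OSC message.

    The address and the single-blob layout are assumptions for illustration.
    """
    if len(pixels) != 64:
        raise ValueError("an 8x8 matrix needs exactly 64 pixels")
    payload = bytes(channel for rgb in pixels for channel in rgb)
    return osc_string(address) + osc_string(",b") + osc_blob(payload)


if __name__ == "__main__":
    message = led_matrix_message([(255, 0, 0)] * 64)  # an all-red frame
    print(len(message), message[:24])
```

Sending one such message per frame would be a single `sendto` on a UDP socket. On the Pi, a receiver could unpack the 192 bytes into 64 triples and hand them, for example, to the Sense HAT Python library's `set_pixels`.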

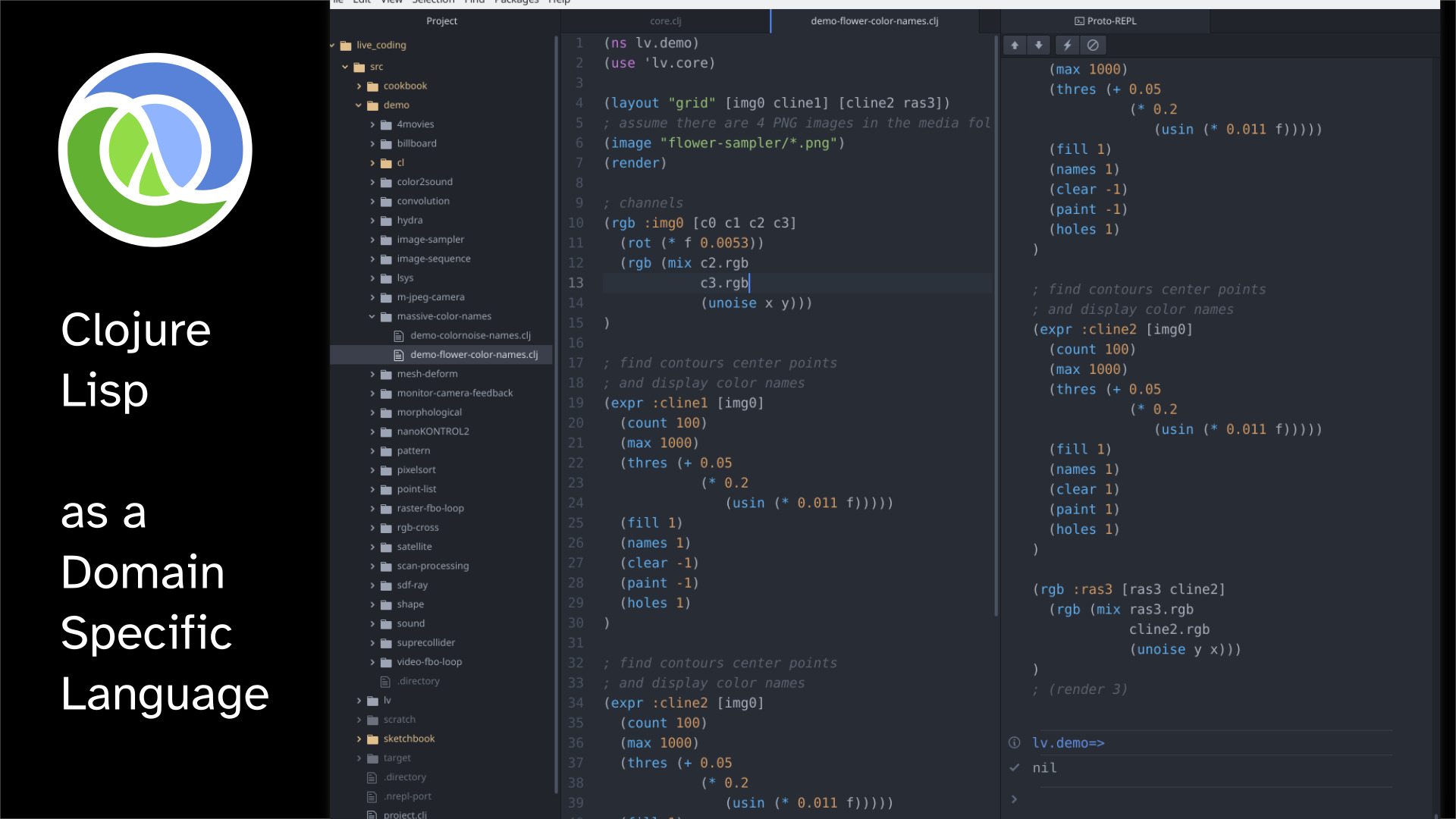

Lisp

Lisp is not what one would expect in the field of video processing, so I want to explain in a bit more detail why I use it:

When we first look at a Lisp program, we see all these brackets. We don’t see any of the structural elements of the programming languages we use today: WHILE, CLASS, new(), return …

But the interesting thing about Lisp is that it can be tailored precisely to a specific task. What you see here in the editor and take to be a “program” is actually the data for a program one layer down. In Lisp there is no difference between data and program. The transformation from the text in the editor to what finally runs goes through several stages.

The lines of source code visible in the editor are mapped to OpenGL shader code, transformed into byte code, or used to load predefined C programs onto the graphics card.

For this I use the macro system of Lisp. Lisp is very good at building domain-specific languages (DSLs). And the many brackets always remain.
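
To illustrate the idea that the program in the editor is data for a layer below, here is a sketch in Python rather than Lisp: nested tuples stand in for Lisp lists, and a small recursive function turns them into GLSL source text. The `tex` form, the uniform names `tex0`/`tex1` and the function names are hypothetical; aNa itself does this in Lisp with macros and its own DSL.

```python
from typing import Union

Expr = Union[float, int, str, tuple]  # a tuple plays the role of a Lisp list


def to_glsl(expr: Expr) -> str:
    """Translate a nested tuple (an s-expression) into a GLSL expression string."""
    if isinstance(expr, tuple):
        op, *args = expr
        if op in ("+", "-", "*", "/"):
            # Infix arithmetic, parenthesized to preserve the tree structure.
            return "(" + f" {op} ".join(to_glsl(a) for a in args) + ")"
        if op == "tex":
            # Hypothetical DSL form: sample input texture number n at uv.
            return f"texture(tex{args[0]}, uv)"
        # Everything else becomes a GLSL function call, e.g. mix(...).
        return f"{op}(" + ", ".join(to_glsl(a) for a in args) + ")"
    if isinstance(expr, float):
        return repr(expr)  # keeps the decimal point, e.g. 0.5
    return str(expr)


def fragment_shader(expr: Expr) -> str:
    """Wrap the translated expression in a complete GLSL 3.30 fragment shader."""
    body = to_glsl(expr)
    return (
        "#version 330\n"
        "uniform sampler2D tex0;\n"
        "uniform sampler2D tex1;\n"
        "in vec2 uv;\n"
        "out vec4 color;\n"
        f"void main() {{ color = {body}; }}\n"
    )


if __name__ == "__main__":
    program = ("mix", ("tex", 0), ("tex", 1), 0.5)  # cross-fade two inputs
    print(fragment_shader(program))
```

The generated string would then be handed to the GLSL compiler at run time; in Lisp, a macro can do the same translation on the code itself, because the code already is a list.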

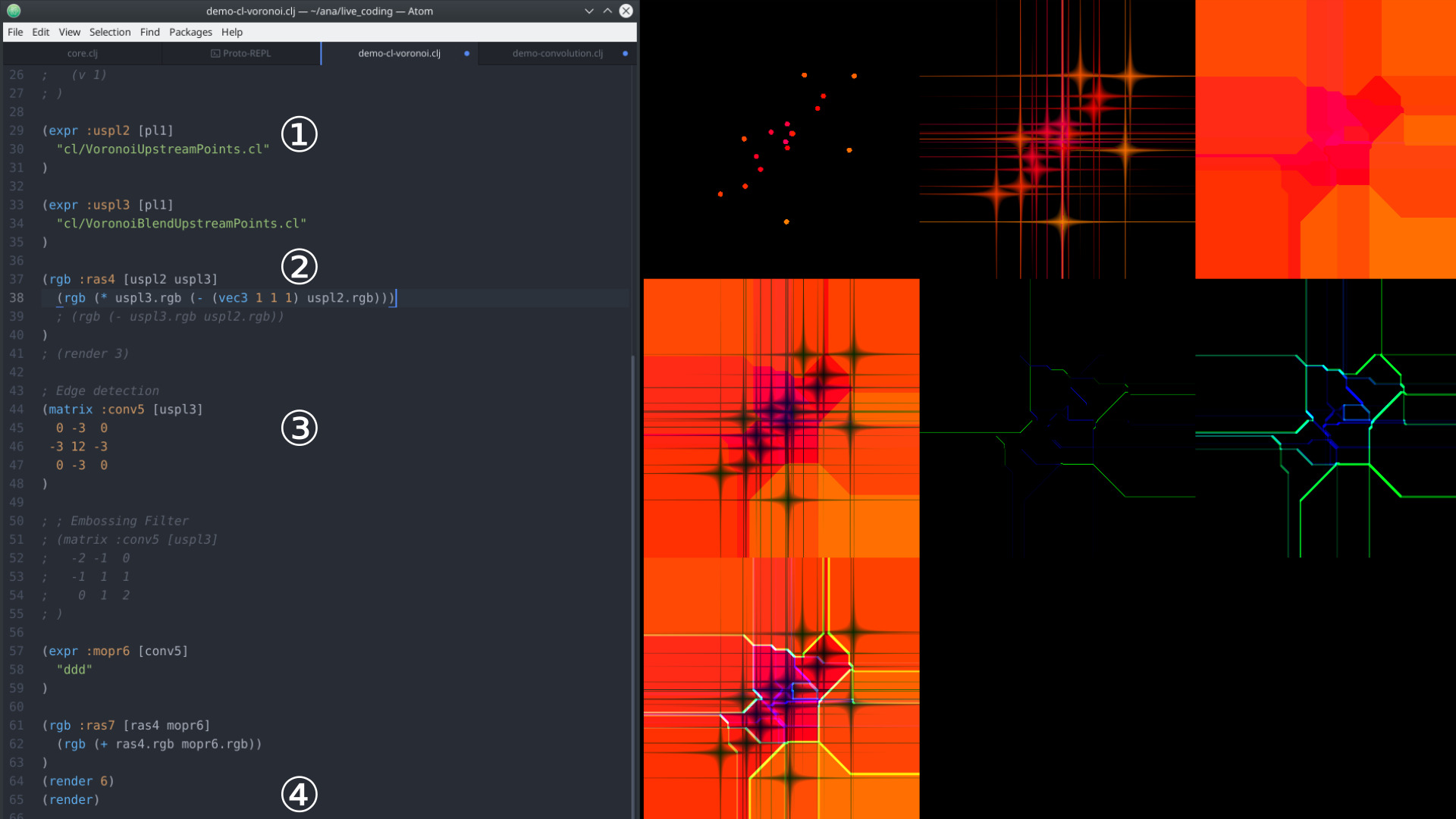

About the example program:

- Links to predefined C code that is executed directly on the graphics card.

- Becomes fragment shader source code, which is then compiled by the GLSL compiler. All just in time.

- Sets parameters in a filter, parameterizing either the C++ code or the fragment shader code.

- Controls which frame buffer is shown on the display.

The second reason: you can always change a running Lisp program without having to restart it or losing its data. Java offers this kind of hot code replacement for debugging, but with Lisp it is different. It is part of the basic concept of the language, not an additional feature just for debugging.

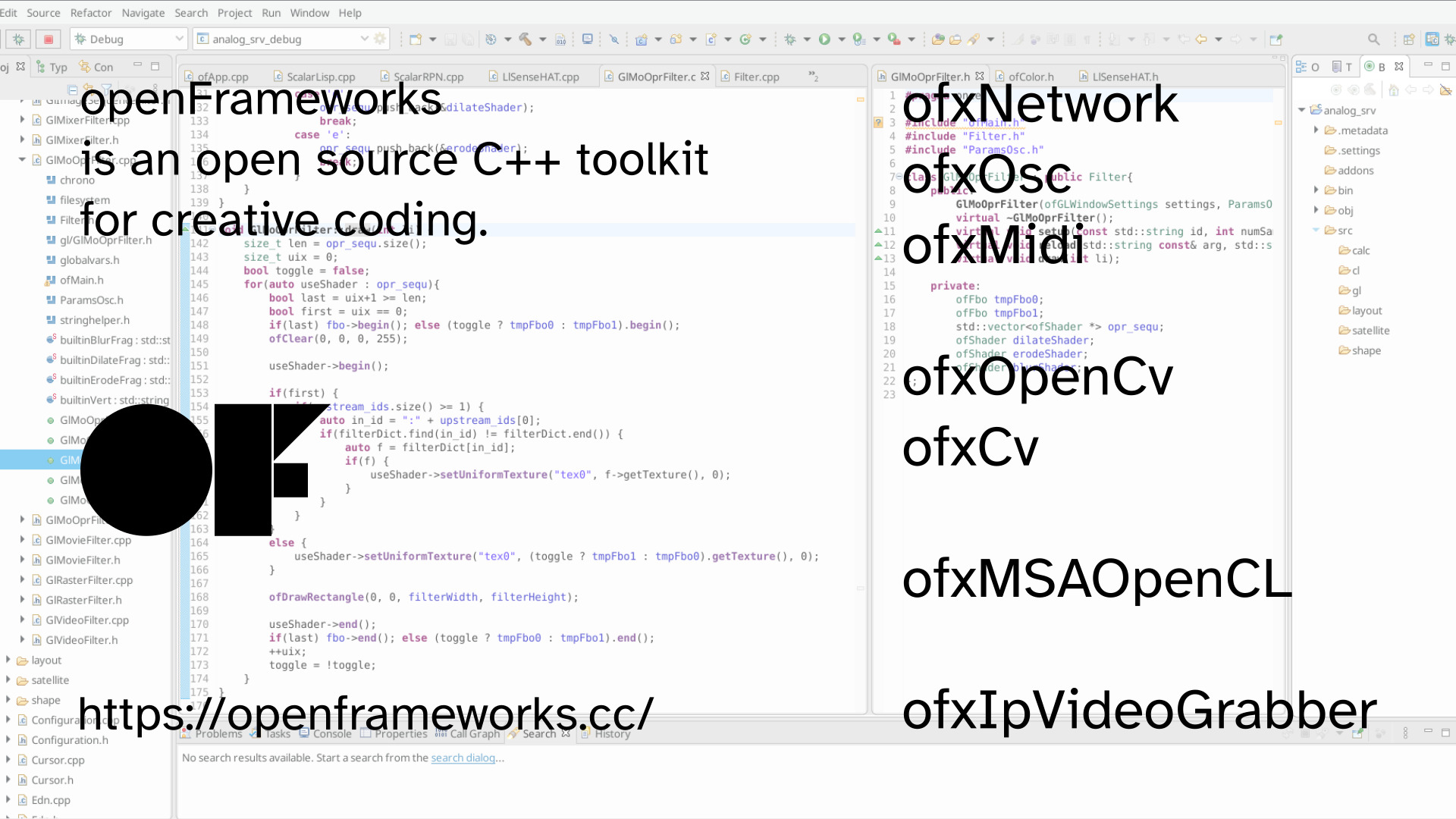

openFrameworks

The visualization needs a lot of computing power, and I want to avoid a software stack with many managed layers. Hence C++, openFrameworks, OpenCL, GLSL shaders …

Inter process communication

For inter process communication I use OSC.

The software components used in aNa are very heterogeneous, and OSC is the protocol through which they work together:

- Clojure is based on Java.

- The editor Atom is written in JavaScript.

- The visualization is native C/C++ code.

- On the Raspberry Pi the sensors and LEDs can be operated in Python.

- SuperCollider has its own domain specific language.

That is why a protocol, not an API, holds the system together.
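
Because only the wire format is shared, each component can decode messages in its own language without linking against the others. As a sketch, this minimal OSC 1.0 decoder in Python handles only the `i` (int32), `f` (float32) and `s` (string) type tags; the function names and the address `/speed` in the usage below are hypothetical.

```python
import struct
from typing import List, Tuple, Union

Arg = Union[int, float, str]


def _read_string(data: bytes, pos: int) -> Tuple[str, int]:
    """Read a NUL-terminated OSC string starting at a 4-byte-aligned offset."""
    end = data.index(b"\x00", pos)
    text = data[pos:end].decode("ascii")
    pos = end + 1
    pos += -pos % 4  # skip padding up to the next 4-byte boundary
    return text, pos


def decode_message(data: bytes) -> Tuple[str, List[Arg]]:
    """Return (address, arguments) of a single OSC message."""
    address, pos = _read_string(data, 0)
    tags, pos = _read_string(data, pos)
    if not tags.startswith(","):
        raise ValueError("missing type tag string")
    args: List[Arg] = []
    for tag in tags[1:]:
        if tag == "i":
            args.append(struct.unpack_from(">i", data, pos)[0])
            pos += 4
        elif tag == "f":
            args.append(struct.unpack_from(">f", data, pos)[0])
            pos += 4
        elif tag == "s":
            text, pos = _read_string(data, pos)
            args.append(text)
        else:
            raise ValueError(f"unsupported type tag {tag!r}")
    return address, args


if __name__ == "__main__":
    raw = b"/speed\x00\x00" + b",if\x00" + struct.pack(">i", 42) + struct.pack(">f", 0.5)
    print(decode_message(raw))
```

The same few rules (4-byte alignment, big-endian numbers, a type tag string) are all that the Clojure, JavaScript, C++, Python and SuperCollider sides need to agree on.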

Collaboration

Using a protocol instead of an API sounds complicated.

But because the components are coupled via an IP protocol, aNa also runs on a decentralized system. For example, the visualization server can run on a dedicated high-performance computer, and several laptops with a live coding editor can connect to it. This way, several people can collaborate during a performance.
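
Assuming the OSC messages travel over UDP, as is common, any number of senders can address one receiver without setting up connections. The sketch below shows that pattern with Python's `socket` module. The function names are hypothetical, the example runs on the loopback interface for testing, and the payloads are plain bytes where a real setup would send encoded OSC messages.

```python
import socket
from typing import List, Tuple

Address = Tuple[str, int]


def open_server(host: str = "127.0.0.1", port: int = 0) -> socket.socket:
    """Visualization-server side: one UDP socket that accepts datagrams from anyone.

    port=0 lets the operating system pick a free port.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock


def send(payload: bytes, server_addr: Address) -> None:
    """Live-coding-laptop side: fire and forget, no connection setup needed."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, server_addr)


def receive(server: socket.socket, count: int, timeout: float = 1.0) -> List[Tuple[bytes, Address]]:
    """Collect `count` datagrams together with the address of each sender."""
    server.settimeout(timeout)
    return [server.recvfrom(65535) for _ in range(count)]


if __name__ == "__main__":
    server = open_server()
    address = server.getsockname()
    send(b"laptop-a", address)  # two performers sending to the same server
    send(b"laptop-b", address)
    for payload, sender in receive(server, 2):
        print(sender, payload)
    server.close()
```

Because UDP does not guarantee delivery, this fits per-frame control data well: a lost message is simply replaced by the next one.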

Links

- Repository for analog Not analog: https://gitlab.com/metagrowing/ana

- Social media: https://chaos.social/@kandid